The evolution of the Security Operations Center (SOC)

Security Information and Event Management (SIEM) platforms have gradually become the center of SOC operations. They generate the security detections and alerts that security analysts have to evaluate. Usually, a tier 1 analyst performs the initial triage of SIEM alerts and escalates high-priority ones to tier 2 or tier 3 for more thorough threat hunting. Tier 2 and 3 analysts use additional tools, such as centralized log management and analytics platforms, to hunt for anomalous activity that could date back months. The goal is to identify which actions the SOC team should take now, how to stop a breach, and how to prevent one in the future.

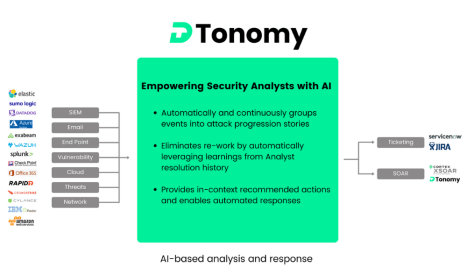

To help the SOC team investigate and respond to security issues quickly, newer tools such as Security Orchestration, Automation, and Response (SOAR) automate the repetitive tasks that security analysts perform every day. Automating low-level tasks has great potential, but it also comes with a few challenges. Primarily, security analysts have to know what to automate. For example, “if there are too many brute force logins, then lock down the account” is not effective automation. Analysts are reluctant to automate such responses because they lack confidence in the underlying analysis and in the automated action that follows.

The gap between Detection and Response

The gap between detection and response is the manual effort security analysts must spend investigating each alert, either to dismiss it as a false positive or to consolidate the details of an attack so that it can be remediated accurately.

When a security alert arrives, a security analyst will ask…

- Is it real?

- What is the impact?

- How to clean it up if it is real?

- How to prevent it if it is a false positive?

- Is it worth automating it and how to automate it?

- Is it safe to automate?

- How did it happen?

Security teams face these questions constantly. Unfortunately, their ability to review each alert and fully investigate potential threats is limited by the time each alert requires. Consequently, security teams ignore early threat activity and triage only high-priority alerts, which increases the risk of missing attacks. What’s more, according to a recent report by Forrester Research, internal security operations teams receive over 11,000 alerts per day on average, and more than 50% are false positives. Analysts’ time is wasted on chasing false positives and on manual operations. The result is “alert fatigue,” a common experience in SOCs.

Measuring the damage of alert fatigue

Many companies and organizations have experienced alert fatigue. What is the right way to measure the damage properly? Here are a few factors that we should take into consideration when measuring the damage caused by alert fatigue.

- Ignored alerts that are true positives

If you are breached, the average cost of an incident is around $3.86 million, according to a report from IBM. Ransom demands alone can exceed that: the Kaseya hackers demanded a $70 million ransom, and Colonial Pipeline paid a $5 million ransom to its attackers.

- Lost man-hours for your security analysts

You can easily calculate the time wasted investigating false positives, time that could be spent on important tasks instead. The more time analysts spend on false alerts, the less your organization benefits from their talent and experience.

- Employees burn out

Eventually, employees may wear out due to the number of alerts they need to address. When employees burn out, it could lead to more errors and more missed alerts. If more employees burn out, it could significantly impact employee retention and company culture.
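The lost man-hours factor above can be estimated with simple arithmetic. The sketch below is only an illustration: the 11,000 alerts per day and 50% false-positive rate are the figures quoted earlier, while the function name and the 5 minutes of triage per alert are assumptions chosen for the example, and it assumes every alert actually gets triaged.

```python
def wasted_analyst_hours(alerts_per_day: int,
                         false_positive_rate: float,
                         minutes_per_alert: float) -> float:
    """Hours per day spent triaging alerts that turn out to be false positives."""
    false_positives = alerts_per_day * false_positive_rate
    return false_positives * minutes_per_alert / 60.0


# 11,000 alerts/day and a 50% false-positive rate are the figures cited above.
# 5 minutes of triage per alert is an assumed placeholder; use your own number.
hours = wasted_analyst_hours(11_000, 0.50, 5)
print(f"{hours:.1f} analyst-hours per day")  # 458.3 analyst-hours per day
```

Replacing the placeholder with your team's measured triage time gives a first estimate of how much analyst capacity false positives consume.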

How AI-based Security Analysis and Response can help:

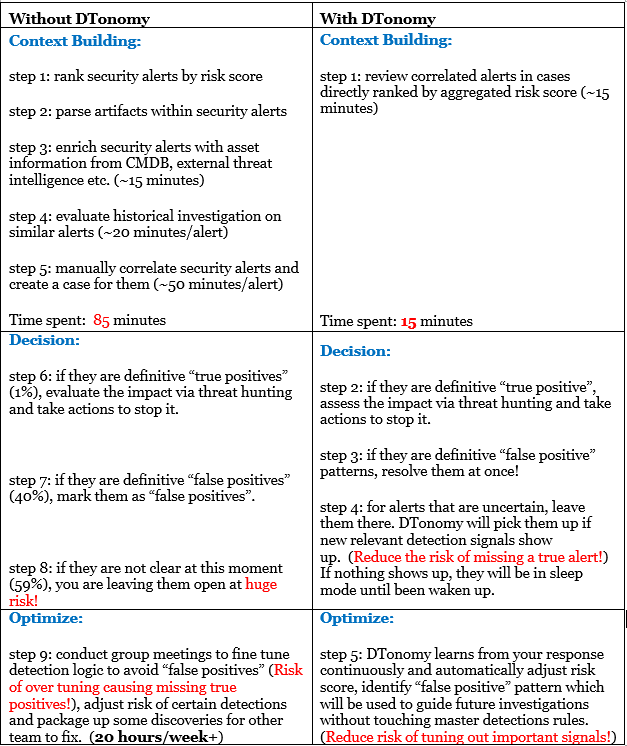

DTonomy invented a solution that helps security analysts reach a conclusion on false positives more quickly and reduces the risk of missing true positives among your existing security detections. How does it work? Let’s do a side-by-side comparison.

Get answers to “false positives” faster.

- When you identify security alerts as “false positives”, DTonomy learns patterns in your responses and continuously validates them against more evidence. For example, it may learn that a pattern of alerts originating from IP 2.3.4.5 is a “false positive” with 100% confidence.

- For incoming alerts, DTonomy’s pattern engine automatically identifies patterns among security alerts so you can quickly identify the offending detection rules. For example, a spike of alerts related to ‘Machine_A’ may show up within a short period of time. The DTonomy AI engine enables you to spot this type of pattern quickly and determine the root cause more easily.

- Each case is ranked with a score aggregated from its security alerts. The risk score of an individual alert is updated when you resolve it as either “true positive” or “false positive”. As a result, the risk score is personalized to your environment and gives you a more accurate representation of your risk.
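To make the three ideas above more concrete, here is a minimal Python sketch of spike detection per entity, feedback-adjusted alert scores, and an aggregated case score. It is not DTonomy's actual engine. The class and function names, the `geo_login` and `port_scan` rule names, the smoothing formula, and the noisy-OR aggregation are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from math import prod
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Alert:
    rule: str          # detection rule that fired
    entity: str        # host, user, or IP the alert is about
    base_score: float  # initial risk score, 0 to 100


def spiking_entities(alerts: Iterable[Alert], threshold: int) -> List[str]:
    """Entities with at least `threshold` alerts in one time window's batch."""
    counts = Counter(alert.entity for alert in alerts)
    return sorted(entity for entity, n in counts.items() if n >= threshold)


class FeedbackScorer:
    """Lowers an alert's score as analysts dismiss similar alerts."""

    def __init__(self) -> None:
        # (rule, entity) -> (true positive count, false positive count)
        self._verdicts: Dict[Tuple[str, str], Tuple[int, int]] = {}

    def record(self, alert: Alert, is_true_positive: bool) -> None:
        key = (alert.rule, alert.entity)
        tp, fp = self._verdicts.get(key, (0, 0))
        self._verdicts[key] = (tp + 1, fp) if is_true_positive else (tp, fp + 1)

    def score(self, alert: Alert) -> float:
        tp, fp = self._verdicts.get((alert.rule, alert.entity), (0, 0))
        # Weight is 1.0 with no feedback and shrinks as dismissals accumulate.
        return alert.base_score * (tp + 1) / (tp + fp + 1)


def case_score(alert_scores: Iterable[float]) -> float:
    """Noisy-OR aggregate: each extra risky alert pushes the case toward 100."""
    return 100.0 * (1.0 - prod(1.0 - s / 100.0 for s in alert_scores))


scorer = FeedbackScorer()
noisy = Alert(rule="geo_login", entity="2.3.4.5", base_score=60.0)
for _ in range(3):
    scorer.record(noisy, is_true_positive=False)
print(scorer.score(noisy))  # 15.0

batch = [Alert("port_scan", "Machine_A", 40.0)] * 3 + [Alert("port_scan", "Machine_B", 40.0)]
print(spiking_entities(batch, threshold=3))  # ['Machine_A']
```

In this sketch, three dismissals cut the score of the alert from 2.3.4.5 to a quarter of its base value, while alerts that have received no feedback keep their original score.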

Reduce the risk of “false negatives”

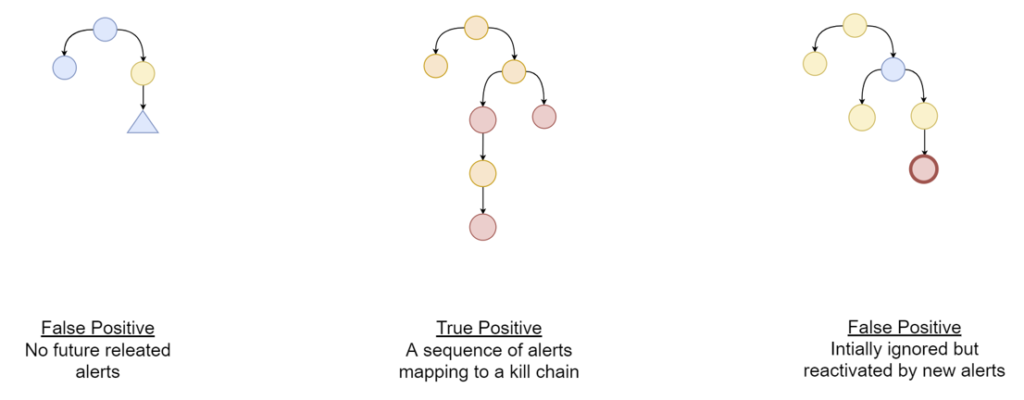

- Even if certain alerts are mislabeled as “false positives”, do not worry. Our system will not filter out those alerts. Instead, we continue to monitor them and connect them with new detections that may lead to strong evidence for a “true positive” determination for a group of alerts.

Get a definitive answer on “false positive”

- As new detections arrive, our pattern engine always looks back at historical alerts to see whether they are connected within a pattern. If no new alerts link to an old pattern, all the alerts in the old pattern can be safely considered “false positives”, as they are unlikely to be risky or to be strong evidence of an attack.
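The look-back behavior described above can be sketched as a small tracker that keeps every alert, links new alerts to older ones, and only flags a pattern as a likely false positive once it has stayed quiet. This is a simplified illustration, not DTonomy's implementation. Linking by an exact shared key, the fixed `quiet_period`, and all names here are assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Pattern:
    key: str                                   # shared attribute, e.g. a host name
    alert_times: List[datetime] = field(default_factory=list)
    confirmed: bool = False                    # set once an analyst confirms an attack


class PatternTracker:
    """Keeps all alerts and links each new one back to earlier alerts."""

    def __init__(self, quiet_period: timedelta) -> None:
        self.quiet_period = quiet_period
        self.patterns: Dict[str, Pattern] = {}

    def add_alert(self, key: str, seen_at: datetime) -> Pattern:
        """Link a new alert to the existing pattern with the same key, or start one."""
        pattern = self.patterns.setdefault(key, Pattern(key))
        pattern.alert_times.append(seen_at)
        return pattern

    def likely_false_positives(self, now: datetime) -> List[str]:
        """Keys of unconfirmed patterns with no newly linked alert for quiet_period."""
        return sorted(
            key for key, p in self.patterns.items()
            if not p.confirmed and now - max(p.alert_times) >= self.quiet_period
        )


tracker = PatternTracker(quiet_period=timedelta(days=30))
t0 = datetime(2021, 7, 1)
tracker.add_alert("Machine_A", t0)
tracker.add_alert("Machine_B", t0)
tracker.add_alert("Machine_A", t0 + timedelta(days=20))  # links back to the older alert
print(tracker.likely_false_positives(now=t0 + timedelta(days=35)))  # ['Machine_B']
```

Because nothing is ever filtered out, an alert that was dismissed too early still becomes part of a growing pattern when related detections arrive later.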

Fit into your current investigation workflow

Instead of replacing existing threat detection capabilities and workflows, DTonomy has integrations with SIEM and SOAR platforms. DTonomy complements these tools and your log analytics solution used for threat hunting so that it fits into your environment seamlessly.

By seamlessly fitting into existing alert triage and threat hunting/investigation workflows, DTonomy bridges the gap between detection and response, saving security analysts time and augmenting their existing workflows.

Additional resources:

Check out the Blog: Addressing Noisy Security Detections — A Complete Solution

Check out DTonomy’s AI Engine: DTonomy AIR Engine

Check out the Blog: Security Alert Fatigue

Join our Slack channel to learn more.